For your poolside reading, go ahead and add Generative Deep Learning to the summer reading bag. It’s not a legal thriller or self-help book, but it will improve your thinking for fall policy discussions! Policymakers may find that for less than $50, it could be worth the time and money to better understand how generative AI works under the hood.

The first part of the book explains the neural networks, weights, and probability analysis used by learning models to output prediction estimates. A good way to describe this first part of the book is the question, “Is this image a picture of a chihuahua or a muffin?” For people who remember AI discussions ten years ago, this was the canonical example of artificial intelligence. Economists with training in econometrics will likely be quick studies of this section of the book. For any data scientists who have dabbled in Kaggle competitions, this section of the book explains the stacking and dropping of layers to discover the weights that are used to make predictions.
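For readers who want to see the chihuahua-or-muffin idea in miniature, here is a rough sketch in plain Python. It is not code from the book: the layer sizes, the random weights, the four made-up input features, and the reading of “dropping” as dropout are all assumptions for illustration. It stacks two layers, optionally drops hidden values during training, and turns the final scores into probabilities.

```python
import math
import random

def dense(inputs, weights, biases):
    """One fully connected layer: a weighted sum of the inputs per output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    """Keep positive values and replace negative ones with zero."""
    return [max(0.0, v) for v in values]

def dropout(values, rate, rng):
    """Training-time dropout: zero each value with probability `rate`,
    and rescale the survivors so the expected total is unchanged."""
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in values]

def softmax(values):
    """Turn raw scores into probabilities that sum to one."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def predict(features, params, training=False, rng=None):
    """Two stacked layers; dropout is applied only while training."""
    hidden = relu(dense(features, params["w1"], params["b1"]))
    if training:
        hidden = dropout(hidden, rate=0.5, rng=rng)
    return softmax(dense(hidden, params["w2"], params["b2"]))

# Hypothetical, untrained weights. Training would adjust these numbers.
rng = random.Random(0)
params = {
    "w1": [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(3)],
    "b1": [0.0] * 3,
    "w2": [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(2)],
    "b2": [0.0] * 2,
}

features = [0.2, 0.7, 0.1, 0.9]  # made-up stand-ins for image measurements
labels = ["chihuahua", "muffin"]
probs = predict(features, params)
for label, p in zip(labels, probs):
    print(f"{label}: {p:.2f}")
```

Because the weights here are random, the printed probabilities mean nothing yet. The point is only the shape of the calculation: weights in, probabilities out.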

The second part of the book goes into the “generative” mechanics that have made this wave of AI innovation such a paradigm shift. Teaching machines how to paint and compose words, images, and audio has been the work of computer scientists over the last ten years, with rapid consumer adoption in the last two.

How Generative AI Works

The book includes updates as of its publication in May 2023, such as Midjourney, DALL.E 2, and Stable Diffusion. A year has already passed since publication, and in May 2024 these chapters may already be dated. But even as a May 2023 edition, it explains much of why current generative AI models are so powerful. It also covers large multimodal models, including text-to-image models such as ControlNet, which is built on a Stable Diffusion model and adds fine-grained control over the output. The figure below shows the model’s ability to change an image based on textual descriptions and prompts.

Source: Lvmin Zhang, ControlNet (Foster, page 403, Fig. 14-6)

Another interesting section of the book describes so-called “world models” for reinforcement learning, based on research first published in 2018.[1] Reinforcement learning is one of three major branches of machine learning, alongside supervised learning (using labeled data) and unsupervised learning (using unlabeled data).

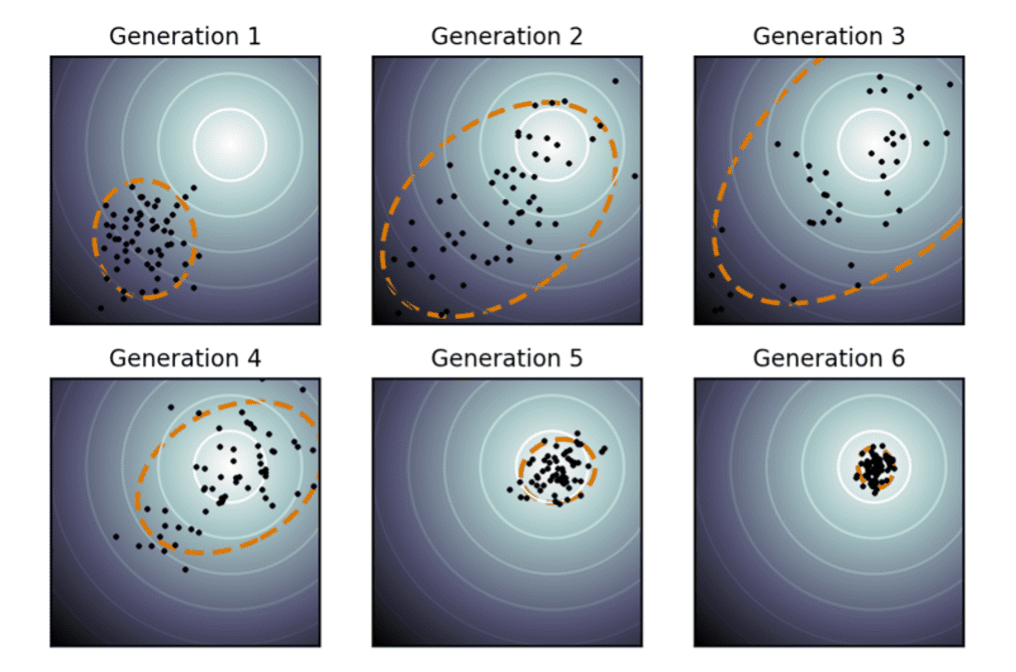

The training process in reinforcement learning is behind many of today’s generative AI applications. For example, the book shows readers the inner workings of a world model and the iterative, statistical evolution strategy used to tune its parameters.

Source: CMA-ES, Wikipedia (Foster, Fig. 12-14, page 351)

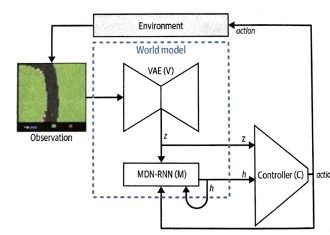
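To make the “iterative and statistical” idea concrete, here is a minimal sketch of a simple evolution strategy. It is a simplified cousin of CMA-ES, not CMA-ES itself, which also adapts a full covariance matrix. The toy `reward` function, its target values, and every setting below are assumptions for illustration. Each generation samples candidate parameters around a mean, keeps the best few, and moves the mean toward them.

```python
import random

TARGET = [0.5, -0.3, 0.8]  # hypothetical "ideal" parameters for the toy task

def reward(params):
    """Toy score: higher is better, with the maximum at TARGET."""
    return -sum((p - t) ** 2 for p, t in zip(params, TARGET))

def evolve(generations=60, population=30, elite=6, sigma=0.5, seed=0):
    """Sample candidates around a mean, keep the best, and repeat."""
    rng = random.Random(seed)
    mean = [0.0, 0.0, 0.0]
    for _ in range(generations):
        # 1. Sample a population of candidates around the current mean.
        candidates = [[m + sigma * rng.gauss(0, 1) for m in mean]
                      for _ in range(population)]
        # 2. Rank the candidates by reward and keep the top performers.
        candidates.sort(key=reward, reverse=True)
        best = candidates[:elite]
        # 3. Move the mean to the average of the top performers.
        mean = [sum(c[i] for c in best) / elite for i in range(len(mean))]
        # 4. Narrow the search a little each generation.
        sigma *= 0.95
    return mean

solution = evolve()
print("start reward:", round(reward([0.0, 0.0, 0.0]), 3))
print("final reward:", round(reward(solution), 3))
```

Nothing here needs gradients or labeled data. The loop needs only a way to score each candidate, which is why this family of methods suits reinforcement learning tasks where the only feedback is a reward.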

The world model discussion may be reminiscent of computer science materials from the 1990s, and that’s because the basics resemble the Model-View-Controller (MVC) software design architecture. Each part of the world model is trained separately. The figure below is an example from the book of the view, the model, and the controller. The training process in reinforcement learning allows models to train themselves on new tasks based on their understanding of the environment.

Source: Foster, Fig. 12-3, p. 336
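The division of labor among the three parts can be sketched in a few lines. This is a loose illustration, not the book’s code. In the 2018 paper the first component is called the vision model (V) and is a variational autoencoder, and the memory model (M) is a recurrent network. Here both are replaced by small random linear maps, and all sizes and weights are assumptions.

```python
import math
import random

rng = random.Random(0)

def random_matrix(rows, cols):
    """Hypothetical untrained weights, standing in for trained networks."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def matvec(matrix, vector):
    """Multiply a matrix by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

OBS, LATENT, HIDDEN, ACTION = 8, 3, 4, 2  # illustrative sizes

W_V = random_matrix(LATENT, OBS)                        # view / vision
W_M = random_matrix(HIDDEN, LATENT + ACTION + HIDDEN)   # model / memory
W_C = random_matrix(ACTION, LATENT + HIDDEN)            # controller

def view(observation):
    """Compress a raw observation into a short latent summary z."""
    return matvec(W_V, observation)

def model(z, action, h):
    """Update the memory h from the latest summary and the action taken."""
    return [math.tanh(v) for v in matvec(W_M, z + action + h)]

def controller(z, h):
    """Choose an action from the current summary and the memory."""
    return [math.tanh(v) for v in matvec(W_C, z + h)]

def rollout(observations):
    """Run the three parts in sequence over a series of observations."""
    h = [0.0] * HIDDEN
    actions = []
    for obs in observations:
        z = view(obs)
        action = controller(z, h)
        h = model(z, action, h)
        actions.append(action)
    return actions

frames = [[rng.random() for _ in range(OBS)] for _ in range(5)]  # fake inputs
actions = rollout(frames)
print(actions[0])
```

Because the three parts communicate only through `z`, `h`, and the action, each can be trained on its own, which matches the separate training described above.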

The book also includes a chapter on generative music, explaining how music generation is a sequence prediction problem. Economists will recognize the autoregressive models from time series analysis that are applied here in treating musical notes as data sequences of tokens. Notes and durations of notes are tokenized or parsed to create training datasets.

Source: Foster, p. 305, Fig. 11-5.

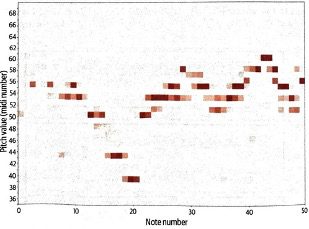

Source: Foster, p. 310, Fig. 11-9.

The inputs and outputs in a “musical Transformer model” are chunked into input windows of various sizes in the diagram. A window width of 4 sixteenth notes is illustrated. The next diagram shows how distributions of notes over time are collected and shown in a heat map. The next note is predicted based on the training data.
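Here is a small sketch of the tokenize, window, and predict steps described above. The melody is invented, and a simple frequency count stands in for the Transformer, so treat this as an illustration of the data flow rather than the book’s model.

```python
from collections import Counter, defaultdict

# A made-up melody as (note, duration in beats) pairs; 0.25 is a sixteenth note.
melody = [("C4", 0.25), ("E4", 0.25), ("G4", 0.5), ("E4", 0.25),
          ("C4", 0.25), ("E4", 0.25), ("G4", 0.5), ("C5", 1.0)]

def build_vocab(items):
    """Assign an integer token to every distinct item."""
    return {item: idx for idx, item in enumerate(sorted(set(items)))}

notes = [note for note, _ in melody]
durations = [duration for _, duration in melody]

note_vocab = build_vocab(notes)
duration_vocab = build_vocab(durations)
note_tokens = [note_vocab[n] for n in notes]
duration_tokens = [duration_vocab[d] for d in durations]

def windows(tokens, width):
    """Build (input, target) pairs where the target is the input shifted
    one step ahead, so each position learns to predict the next token."""
    return [(tokens[start:start + width], tokens[start + 1:start + width + 1])
            for start in range(len(tokens) - width)]

def next_token_counts(tokens):
    """Count which token follows each token in the training sequence."""
    counts = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += 1
    return counts

pairs = windows(note_tokens, width=4)
counts = next_token_counts(note_tokens)

id_to_note = {idx: note for note, idx in note_vocab.items()}
most_likely = counts[note_vocab["E4"]].most_common(1)[0][0]
print("first window:", pairs[0])
print("most common note after E4:", id_to_note[most_likely])
```

In this toy melody, E4 is followed by G4 twice and by C4 once, so G4 is the predicted next note. A Transformer performs the same kind of next-token prediction, but it looks at the whole window rather than only the previous note.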

How Much Do Policymakers Need to Know?

How much do policymakers need to know about how generative AI works? On the one hand, knowing how generative AI works is critically important for policymakers. As innovation moves forward and resources move with it, policymakers would do well to have a baseline understanding of the machinery in generative AI in order to direct institutional expertise to the policy questions around these new technologies.

On the other hand, understanding many of the details probably isn’t necessary for most policymakers. Do policymakers really need to understand how electricity or nuclear power is made? Isn’t the job rather to keep watch on Congressional lawmaking, administrative law, and separation of powers? Institutions such as the courts, Congress, and federal agencies are filled with people who are asking the questions, collecting the data, and hearing from experts in order to interpret the impacts of AI on society. The people who make decisions in these institutions should have a basic understanding of technology proportionate to the task at hand.

That optimal level of understanding is somewhere between competent and expert. Reading materials such as this accessible guide to Generative Deep Learning may just be what you need to keep up this summer!

[1] David Ha, Jurgen Schmidhuber, World Models (2018), https://arxiv.org/abs/1803.10122.

Sarah Oh Lam is a Senior Fellow at the Technology Policy Institute. Oh completed her PhD in Economics from George Mason University, and holds a JD from GMU and a BS in Management Science and Engineering from Stanford University. She was previously the Operations and Research Director for the Information Economy Project at George Mason School of Law. She has also presented research at the 39th Telecommunications Policy Research Conference and has co-authored work published in the Northwestern Journal of Technology & Intellectual Property among other research projects. Her research interests include law and economics, regulatory analysis, and technology policy.