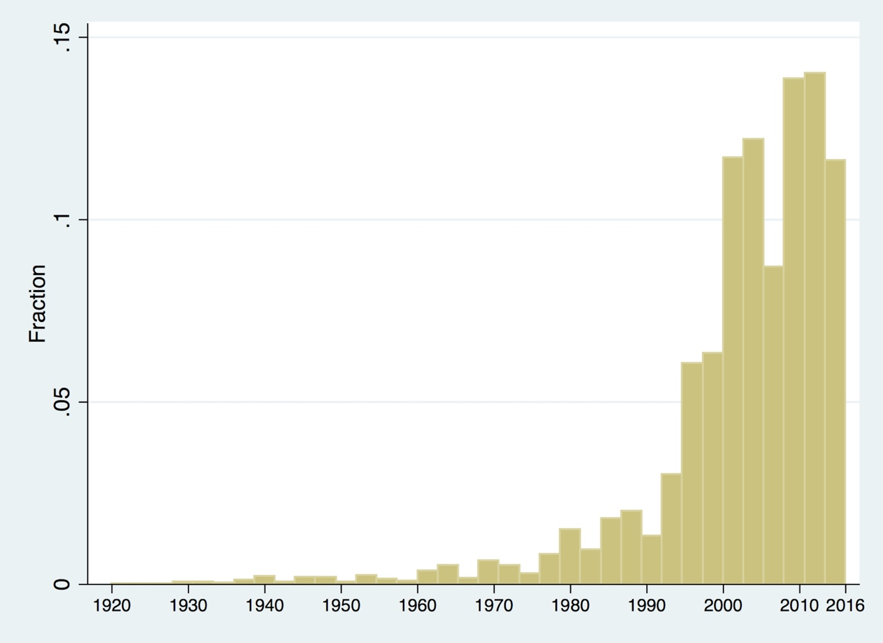

Recently, movie director Brett Ratner said that Rotten Tomatoes, a site that aggregates both professional critics’ and audience reviews, is “the destruction of our business.” To test his claim, I construct a database that includes Rotten Tomatoes scores for almost 2,000 movies and IMDB audience scores for over 4,000 movies released between 1925 and 2016, although most movies in the dataset were released between 2004 and 2016.

I find that, all else equal, each additional percentage point of “fresh” (as opposed to “rotten”) reviews on Rotten Tomatoes is associated with about $1.12 million in additional worldwide revenue, while each additional point on IMDB’s 1-to-10 scale is associated with about $38 million in additional worldwide revenue.

Mine is not the first analysis testing Ratner’s claim. Another recent study found no correlation between Rotten Tomatoes reviews and movie revenues. That study, however, looked only at the overall correlation between reviews and revenues and did not control for other factors that affect revenues, such as genre and content rating. Its approach confirms that reviews are likely not the key factor determining revenues, but it cannot identify whether reviews have an incremental effect once those other factors are held constant.
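To see how a raw correlation can hide an incremental effect, here is a toy Python sketch with made-up numbers (not the data used in this post). Within each hypothetical genre, one extra tomatometer point adds exactly $1 million, but the high-scoring genre earns less overall, so the pooled slope is close to zero. Demeaning score and revenue within each genre is the one-way fixed-effects (“within”) estimator; the regression in this post adds several sets of fixed effects, but the logic is the same.

```python
from collections import defaultdict

# Hypothetical toy data: (genre, tomatometer score, revenue in $ millions).
MOVIES = [
    ("documentary", 80, 10), ("documentary", 90, 20), ("documentary", 100, 30),
    ("action", 20, 14), ("action", 30, 24), ("action", 40, 34),
]

def ols_slope(xs, ys):
    """Slope of a simple least-squares regression of ys on xs (with intercept)."""
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx

def within_slope(rows):
    """Genre fixed-effects slope: demean score and revenue inside each genre,
    then regress demeaned revenue on demeaned score."""
    groups = defaultdict(list)
    for genre, x, y in rows:
        groups[genre].append((x, y))
    dx, dy = [], []
    for pairs in groups.values():
        mx = sum(x for x, _ in pairs) / len(pairs)
        my = sum(y for _, y in pairs) / len(pairs)
        dx.extend(x - mx for x, _ in pairs)
        dy.extend(y - my for _, y in pairs)
    # Demeaned data have mean zero, so no intercept is needed.
    return sum(a * b for a, b in zip(dx, dy)) / sum(a * a for a in dx)

pooled = ols_slope([x for _, x, _ in MOVIES], [y for _, _, y in MOVIES])
within = within_slope(MOVIES)
print(f"pooled slope: {pooled:.3f}")        # 0.007
print(f"within-genre slope: {within:.3f}")  # 1.000
```

Ignoring genre, reviews look almost irrelevant; holding genre fixed, each point is worth $1 million in this toy example.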

The data I use come from three sources. First, I scraped rottentomatoes.com for its “tomatometer” scores; Rotten Tomatoes states that the tomatometer “represents the percentage of professional critic reviews that are positive for a given film or television show.” Second, IMDB data, including score, genre, and rating, come from the “IMDB 5000 Movie Database” hosted on Kaggle.com and compiled by user chuasun76. The IMDB score is the average of the ratings, on a scale of 1 to 10, submitted by users of IMDB.com. Third, movie revenues come from “The Numbers.” Budget and release date were available in both the IMDB and The Numbers datasets. Budgets and revenues are reported in nominal dollars, so I converted them to constant 2016 dollars using the GDP deflator.
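Both calculations described above are simple ratios. A minimal Python sketch, assuming review counts and GDP deflator index values are already in hand; the function names and all numbers below are hypothetical illustrations, not the post’s code, real review counts, or actual BEA deflator values:

```python
def tomatometer(positive_reviews: int, total_reviews: int) -> float:
    """Percentage of critic reviews that are positive (0-100)."""
    if total_reviews <= 0:
        raise ValueError("need at least one review")
    return 100.0 * positive_reviews / total_reviews

def to_2016_dollars(nominal: float, deflator_year: float, deflator_2016: float) -> float:
    """Rescale a nominal amount by the ratio of the 2016 price index
    to the index for the year in which the amount was reported."""
    return nominal * deflator_2016 / deflator_year

# Illustrative inputs only (hypothetical counts and index values).
print(tomatometer(150, 200))               # 75.0
print(to_2016_dollars(50e6, 80.0, 100.0))  # 62500000.0
```

Because the deflator enters only as a ratio, the choice of base year for the index does not matter as long as both values come from the same series.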

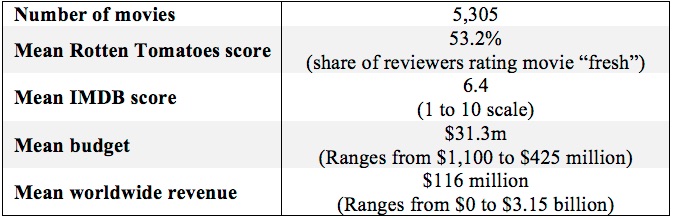

Summary data are at the bottom of this post for those who are interested.

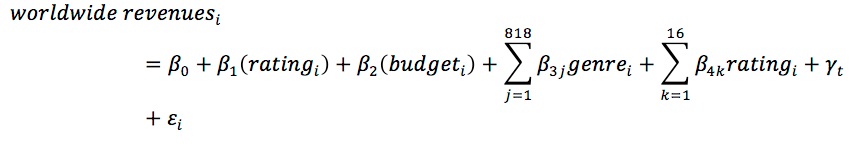

To estimate the effects of ratings, I ran the following multivariate regression:

Here $i$ indexes the movie, $\text{rating}_i$ is its Rotten Tomatoes or IMDB score, and $\text{budget}_i$ is its production budget. The IMDB 5000 database combines genres, yielding 818 unique genre combinations. The data include 16 distinct content ratings, because some movies were rated for television, although almost 90 percent are rated R, PG-13, or PG. Because genre and content rating may affect expected revenues, the regression includes fixed effects for each, as well as year fixed effects to control for differences in economic and other conditions over time. Revenues are in constant 2016 dollars.
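Written out from the description above, the specification is presumably of the following form. The fixed-effect symbols $\gamma$, $\delta$, $\tau$ and the index functions are my own labels, and the original notation may differ:

$$
\text{revenue}_i = \beta_0 + \beta_1\,\text{rating}_i + \beta_2\,\text{budget}_i + \gamma_{g(i)} + \delta_{c(i)} + \tau_{t(i)} + \varepsilon_i
$$

where $g(i)$, $c(i)$, and $t(i)$ are movie $i$’s genre combination, content rating, and release year, and $\beta_1$ is the ratings coefficient, in dollars of worldwide revenue per point of score.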

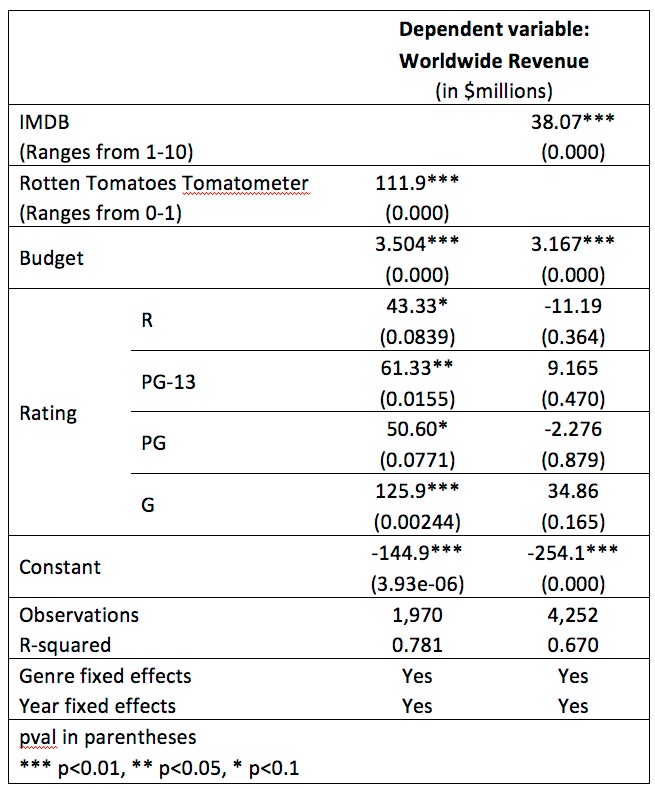

The table below shows the results of estimating this equation.

The ratings coefficients are statistically significant and economically meaningful. The results imply that a one percentage point increase in a movie’s tomatometer score is associated with a $1.12 million increase in worldwide revenues. Thus, all else equal, a movie rated 60 percent “fresh” would earn about $11.2 million more than a movie rated 50 percent “fresh.” Similarly, all else equal, a movie rated 6.0 on IMDB would earn about $38 million more than a movie rated 5.0. Given that mean worldwide revenues are about $116 million, those are not small numbers.
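The back-of-the-envelope arithmetic above, as a short Python sketch using only the point estimates and mean quoted in the text. It simply scales the linear coefficients; it says nothing about causality or sampling uncertainty, and `implied_gap` is a hypothetical helper name:

```python
# Point estimates and mean quoted in the text (millions of 2016 dollars).
TOMATOMETER_COEF = 1.12  # per tomatometer percentage point
IMDB_COEF = 38.0         # per IMDB point (1-10 scale)
MEAN_REVENUE = 116.0     # mean worldwide revenue in the sample

def implied_gap(coef: float, high: float, low: float) -> float:
    """Revenue difference implied by a linear coefficient, all else equal."""
    return coef * (high - low)

rt_gap = implied_gap(TOMATOMETER_COEF, 60, 50)  # 60% vs 50% fresh
imdb_gap = implied_gap(IMDB_COEF, 6.0, 5.0)     # 6.0 vs 5.0 on IMDB

for label, gap in [("Rotten Tomatoes 60 vs 50", rt_gap), ("IMDB 6.0 vs 5.0", imdb_gap)]:
    print(f"{label}: ${gap:.1f}M, {gap / MEAN_REVENUE:.1%} of mean revenue")
```

This prints $11.2M (9.7% of mean revenue) for the tomatometer comparison and $38.0M (32.8%) for the IMDB comparison.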

These results, of course, do not imply that Rotten Tomatoes and IMDB are hurting ticket sales. For one thing, while bad reviews are associated with lower revenues, good reviews are associated with higher revenues. If one assumes causality runs from ratings to revenues, then these reviews can both amplify and depress revenues.

But the direction of causality is likely not so simple. Reviews reflect critic and audience preferences, and in general critics and audiences don’t like bad movies. Bad movies get bad reviews and low revenues, and vice versa.
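A small simulation can make this concrete. It is a hypothetical model, not fitted to this post’s data: an unobserved “quality” drives both review scores and revenue, and reviews have no causal effect at all. A naive regression of revenue on reviews still finds a clearly positive coefficient; once quality is partialled out of both variables (the Frisch-Waugh-Lovell approach), the coefficient falls to about zero. In real data quality is not observed, which is why the estimates in this post are best read as associations.

```python
import random

def ols_fit(xs, ys):
    """Intercept and slope of a least-squares regression of ys on xs."""
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return my - slope * mx, slope

def residuals(xs, ys):
    """Part of ys not explained by a linear regression on xs."""
    a, b = ols_fit(xs, ys)
    return [y - (a + b * x) for x, y in zip(xs, ys)]

rng = random.Random(2016)
N = 20_000
# Hypothetical model: latent quality drives both reviews and revenue.
quality = [rng.gauss(0, 1) for _ in range(N)]
reviews = [50 + 15 * q + rng.gauss(0, 10) for q in quality]   # review score
revenue = [100 + 30 * q + rng.gauss(0, 20) for q in quality]  # $M; no review term

_, naive = ols_fit(reviews, revenue)
# Frisch-Waugh-Lovell: remove quality from both variables, then regress.
_, controlled = ols_fit(residuals(quality, reviews), residuals(quality, revenue))
print(f"naive review coefficient: {naive:.2f}")
print(f"controlling for quality:  {controlled:.2f}")
```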

Studios should not shun sites like Rotten Tomatoes that aggregate preferences. Instead, in today’s data-driven world they should embrace every possible source of information regarding audiences’ likes and dislikes. In other words, someone should tell Ratner to exploit the messenger, not shoot it. Perhaps by embracing the data he could direct more movies like Night Will Fall (perfectly fresh at 100% on Rotten Tomatoes) and fewer like Movie 43 (abysmally rotten at 4%).

Summary Data

Share of Movies by Year Released