The Trump Administration promises a “golden age” of American artificial intelligence leadership but is attacking the science and global connections that would make it happen. This disconnect reveals a fundamental misunderstanding of how breakthroughs occur and threatens the historically bipartisan foundations of the innovation it seeks to champion.

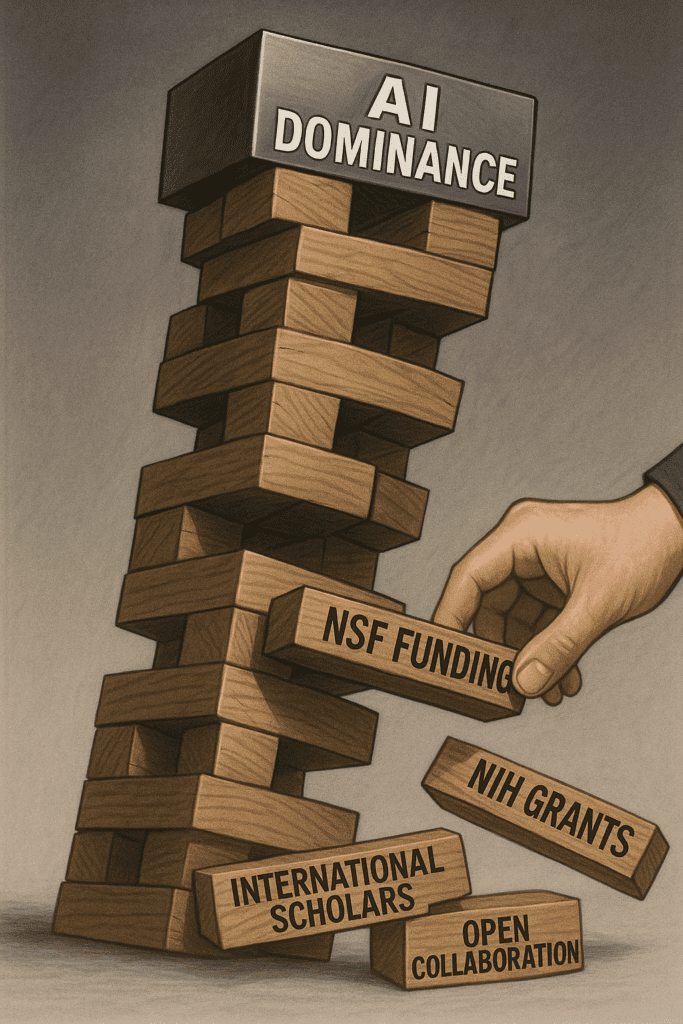

The newly released AI Action Plan says the Administration wants to “advance the science of AI” through federal investment. But with tech companies expected to spend over $300 billion on AI development in 2025 alone, it’s unclear why additional government investment is needed. More troubling, while increasing funding for AI-related research, the White House and the House of Representatives are proposing to slash support for other science. They aim to cut the National Science Foundation and National Institutes of Health budgets by 55 and 37 percent, respectively.

Artificial intelligence didn’t spring from targeted research. It grew out of fields nobody initially associated with AI. Reinforcement learning, for example, originated with psychologist B.F. Skinner’s experiments training animals with rewards and punishments. Transformer technology, which underlies today’s language models, draws on cognitive psychology research dating back to the 19th century. And backpropagation, the breakthrough that lets neural networks “teach themselves,” began as DARPA-funded economics research at Harvard by Paul Werbos, who was trying to understand complex systems. Werbos later led an NSF program that supported the kind of research that would go on to spark the deep learning revolution.

If the Nixon or Ford Administrations had imposed science funding constraints like those this Administration proposes, we might not have today’s AI at all.

The real value of government science funding comes from supporting research that creates broad public benefits but lacks obvious commercial payoffs. This seems to be precisely the kind of work now on the chopping block. I’m not against public support for AI. Smart government support is essential. But it should fill gaps the private sector won’t, and not come at the expense of other scientific fields that may hold the seeds of tomorrow’s breakthroughs.

Threats to innovation go beyond funding. Science thrives on openness, and American innovation has always relied on collaborating globally and attracting the world’s best minds. For decades, this openness and magnetic appeal gave America an enormous competitive advantage. Foreign-born researchers account for well over a third of the Nobel Prizes won by scientists at American institutions since 2000.

Many advances underlying the digital economy also grew out of innovation around the world. Consider fiber optic communications. British physicist Charles Kao’s theoretical work on transmitting light through glass fibers seemed purely academic. American telecommunications companies turned his insights into fiber optic networks that not only stream data to billions of people around the world but also allow the data centers that power AI to function.

Or take deep learning. While at Princeton, Chinese-American computer scientist Fei-Fei Li assembled millions of labeled images into what became the ImageNet database. That database was the foundation of the ImageNet Challenge, an annual competition that tested AI algorithms on image recognition tasks. Deep learning’s key breakthrough arguably came at the 2012 competition, where a neural network developed by Canadian graduate student Alex Krizhevsky won by a dramatic margin. Researchers subsequently built on Krizhevsky’s breakthrough to create the computer vision systems that power everything from autonomous vehicles to medical diagnosis.

Yet the Administration is throwing sand in the gears of this innovation engine through its hostility to international talent, including new visa restrictions, heightened scrutiny of foreign scholars, and policies that chill collaboration.

The Administration’s AI Action Plan trumpets dominance, but its policies undermine what made America an innovation powerhouse in the first place. By defunding science not targeted at AI, duplicating what the private sector is already doing, and alienating global talent, the Administration isn’t just sacrificing America’s edge. It’s handing the AI future to competitors willing to invest in science rather than slogans.

Scott Wallsten is President and Senior Fellow at the Technology Policy Institute and also a senior fellow at the Georgetown Center for Business and Public Policy. He is an economist with expertise in industrial organization and public policy, and his research focuses on competition, regulation, telecommunications, the economics of digitization, and technology policy. He was the economics director for the FCC’s National Broadband Plan and has been a lecturer in Stanford University’s public policy program, director of communications policy studies and senior fellow at the Progress & Freedom Foundation, a senior fellow at the AEI-Brookings Joint Center for Regulatory Studies and a resident scholar at the American Enterprise Institute, an economist at The World Bank, a scholar at the Stanford Institute for Economic Policy Research, and a staff economist at the U.S. President’s Council of Economic Advisers. He holds a PhD in economics from Stanford University.